Our focus this year has been to enhance the accuracy and robustness of our perception pipeline. By leveraging the benefits of both deep-learning and traditional computer vision approaches, we aim to improve our chances of localising the different obstacles in the TRANSDEC environment.

At the highest level, the user interacts with the Mission Planner Interface. The mission planner orchestrates the task nodes, which in turn interface with the vision pipeline and the navigation and control systems.

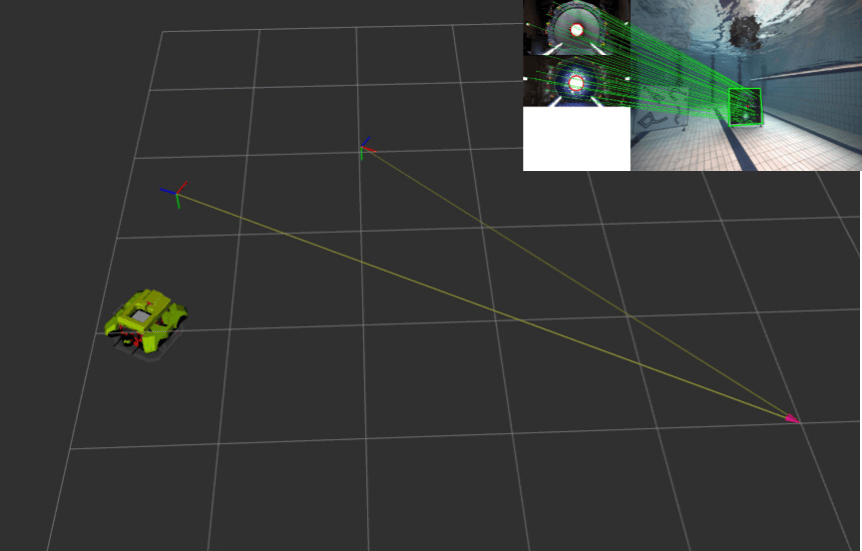

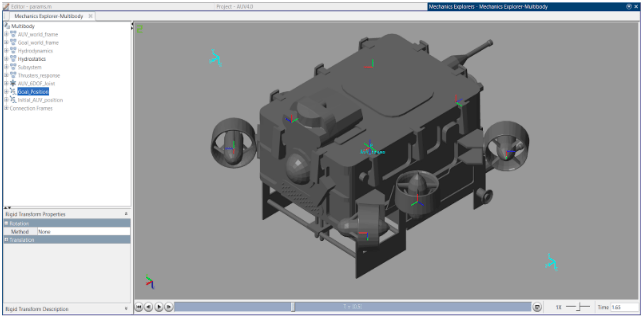

Simulation

We placed a strong emphasis on software simulation as part of our test-driven development cycle, using tools such as Gazebo and MATLAB Simulink.

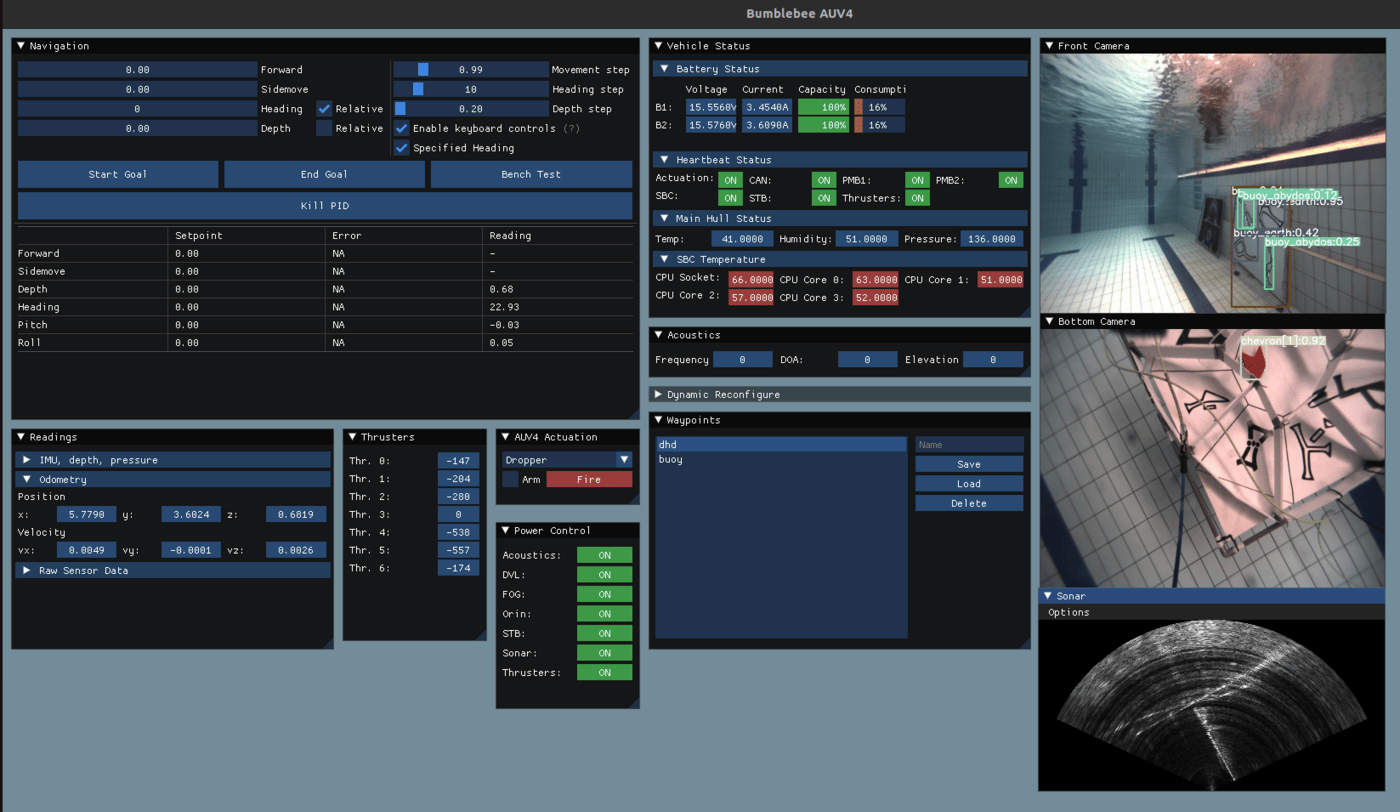

Control Panel Interface

The Bumblebee 4.1 Control Panel displays telemetry, camera images and sonar images. When the vehicle is connected over the tether, it supports monitoring of sensor and actuator data for system analysis during operational deployments. The control panel is also used to control the vehicle in teleoperation mode.

The software stack includes a logging system that captures telemetry, video and log messages during both tethered and autonomous operations. Logged data can be replayed and streamed into the user interfaces, allowing post-mortem analysis of a mission and evaluation of the collected data.
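The report does not specify the log format or the replay mechanism. As a minimal sketch, assuming each message is stored as a JSON Lines record with a timestamp and a stream name (`LogRecord`, `write_log` and `replay` are hypothetical names, not the team's API), replay can preserve the original timing by waiting for the gap between consecutive records before forwarding each one:

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Callable, Iterable


@dataclass
class LogRecord:
    """One logged message (hypothetical format): arrival time, stream name, payload."""
    stamp: float   # seconds since the start of the log
    topic: str     # hypothetical stream name, e.g. "telemetry" or "camera/front"
    payload: dict


def write_log(records: Iterable[LogRecord]) -> str:
    """Serialise records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(asdict(r)) for r in records)


def replay(log_text: str, sink: Callable[[LogRecord], None],
           speed: float = 1.0, sleep: Callable[[float], None] = time.sleep) -> int:
    """Stream logged records into `sink` in time order, preserving relative timing.

    `speed` > 1 replays faster than real time. Returns the number of records replayed.
    """
    records = [LogRecord(**json.loads(line)) for line in log_text.splitlines() if line]
    records.sort(key=lambda r: r.stamp)
    previous = records[0].stamp if records else 0.0
    for record in records:
        # Wait for the gap between consecutive messages, scaled by the replay speed.
        sleep(max(0.0, (record.stamp - previous) / speed))
        previous = record.stamp
        sink(record)
    return len(records)
```

Passing `sleep` in as a parameter keeps the loop testable and lets a user interface step through records without waiting, while `speed` allows faster-than-real-time review.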

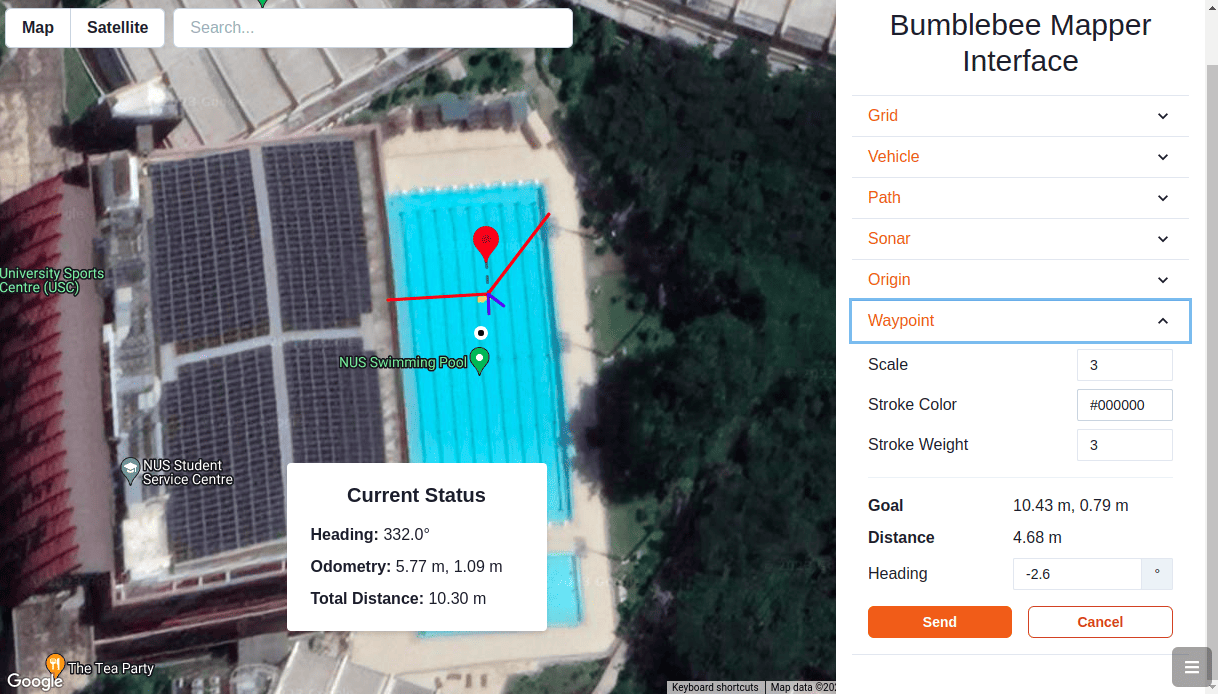

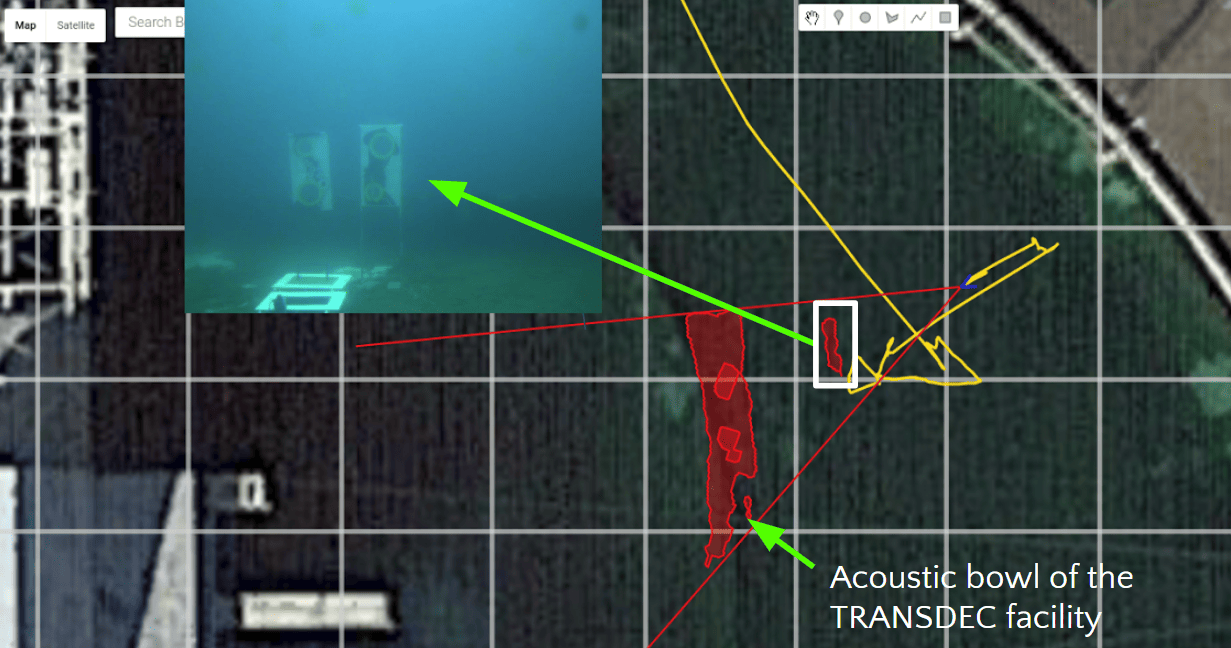

Mapping Interface

Apart from the control panel, the Bumblebee 4.1 vehicle has a mapping interface. In teleoperation mode, it enables point-and-click operation: the vehicle moves to the location the operator indicates on the map.

The mapping interface maps out key sonar objects in the area and projects them onto the map, so that the operator can directly correlate each object's real-world position with its map coordinates.
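Placing a sonar contact on the map means transforming its range and bearing from the vehicle frame into the world frame using the vehicle's navigation pose, and then into map pixels. The sketch below assumes a flat 2D map, headings and bearings in radians measured counter-clockwise, and a uniform metres-per-pixel grid; `Pose2D`, `MapGrid` and the function names are hypothetical. The inverse mapping, from pixel to world, is what a point-and-click waypoint would use.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose2D:
    x: float        # metres, world frame
    y: float        # metres, world frame
    heading: float  # radians, counter-clockwise from the world x-axis


@dataclass
class MapGrid:
    origin_x: float    # world x (m) of pixel column 0
    origin_y: float    # world y (m) of pixel row 0
    resolution: float  # metres per pixel


def sonar_to_world(pose: Pose2D, range_m: float, bearing: float) -> Tuple[float, float]:
    """Project a sonar return (range, bearing relative to the bow) into world coordinates."""
    angle = pose.heading + bearing
    return (pose.x + range_m * math.cos(angle), pose.y + range_m * math.sin(angle))


def world_to_pixel(grid: MapGrid, x: float, y: float) -> Tuple[int, int]:
    """Convert a world position to the (column, row) of the map display."""
    return (round((x - grid.origin_x) / grid.resolution),
            round((y - grid.origin_y) / grid.resolution))


def pixel_to_world(grid: MapGrid, col: int, row: int) -> Tuple[float, float]:
    """Inverse mapping, e.g. to turn an operator's click into a world-frame waypoint."""
    return (grid.origin_x + col * grid.resolution,
            grid.origin_y + row * grid.resolution)
```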

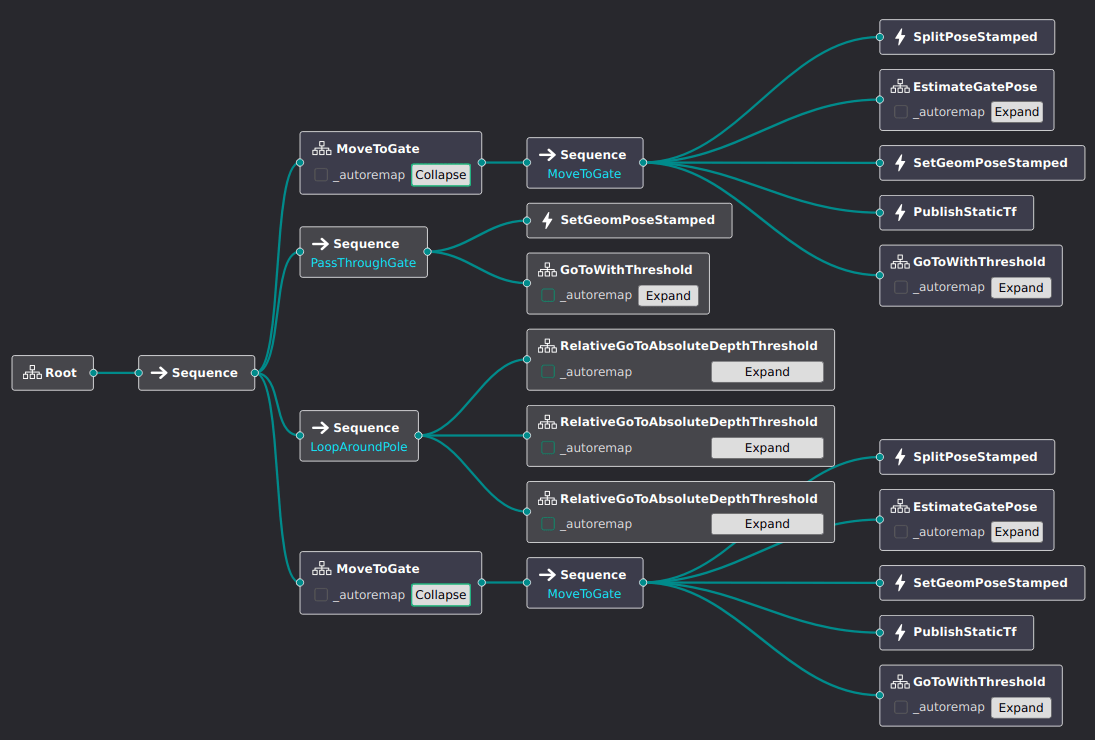

Mission Planner

The highly modular software architecture complements the Behavior Tree (BT)-based mission planner. We have extended the mission planner with a Graphical User Interface (GUI) that makes mission plans easy to design and modify.

Furthermore, we have improved the reusability of our mission planner: different tasks can reuse the same high-level plans, with unnecessary details abstracted away, which simplifies the mission planning process.
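A behavior tree is built from leaf actions and composite nodes such as Sequence and Fallback. The sketch below is a minimal, memoryless implementation (not the team's planner or any particular BT library) showing how a high-level plan can be written once and reused across tasks by swapping its leaves; `approach_and_act` and the leaf roles are hypothetical.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Node:
    def tick(self) -> Status:
        raise NotImplementedError


class Action(Node):
    """Leaf wrapping a callable, e.g. a task node that talks to vision or control."""
    def __init__(self, name: str, fn: Callable[[], Status]):
        self.name, self.fn = name, fn

    def tick(self) -> Status:
        return self.fn()


class Sequence(Node):
    """Ticks children in order; returns early on the first child that does not succeed."""
    def __init__(self, children: List[Node]):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback(Node):
    """Ticks children in order; returns early on the first child that does not fail."""
    def __init__(self, children: List[Node]):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


def approach_and_act(find: Node, align: Node, act: Node, search: Node) -> Node:
    """Reusable high-level plan: locate the target (searching if needed), align, then act."""
    return Sequence([Fallback([find, Sequence([search, find])]), align, act])
```

The same `approach_and_act` subtree could serve, for example, a gate task and a marker-dropper task, with only the leaf actions changing between them.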

Underwater Perception and Tracking

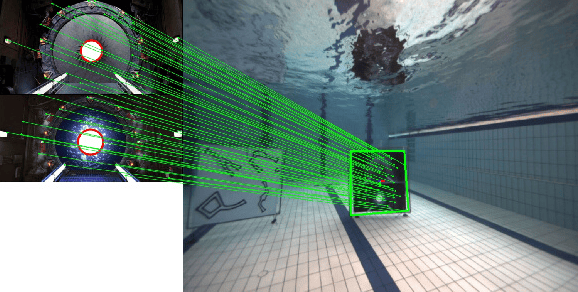

Image Matching

The upgraded pipeline incorporates deep-learning models such as SuperPoint (keypoint detection and description) and SuperGlue (feature matching) to estimate obstacle poses directly, by matching features extracted from our camera images against the template images provided in training.
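SuperGlue is a learned matcher and is not reproduced here. To show what the matching step produces, the sketch below uses a classical stand-in: mutual nearest-neighbour matching with Lowe's ratio test over descriptor vectors, such as those SuperPoint outputs. The function name and the default ratio of 0.8 are assumptions. The resulting index pairs are the 2D correspondences that a pose solver would then consume.

```python
import math
from typing import List, Sequence, Tuple

Descriptor = Sequence[float]


def match_descriptors(query: List[Descriptor], template: List[Descriptor],
                      ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Return (query_idx, template_idx) pairs that pass the ratio test and are mutual nearest neighbours."""
    if len(template) < 2:
        return []  # the ratio test needs a second-nearest neighbour
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted(range(len(template)), key=lambda ti: math.dist(q, template[ti]))
        best, second = ranked[0], ranked[1]
        # Reject ambiguous matches: the best must be clearly closer than the runner-up.
        if math.dist(q, template[best]) >= ratio * math.dist(q, template[second]):
            continue
        # Mutual check: the chosen template feature's nearest query feature must be qi.
        back = min(range(len(query)), key=lambda j: math.dist(template[best], query[j]))
        if back == qi:
            matches.append((qi, best))
    return matches
```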

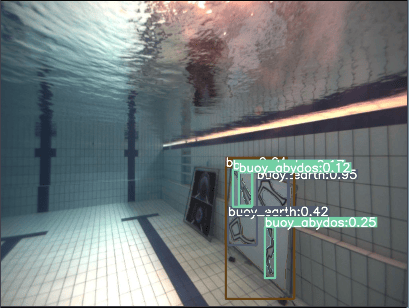

Object Detection and Segmentation

We also deploy object detection and segmentation models such as YOLOv8, together with traditional computer vision techniques such as Scale-Invariant Feature Transform (SIFT) feature matching and Perspective-n-Point (PnP) pose estimation, to supplement the deep models. One advantage of this hybrid approach is that we can adjust it dynamically to varying pool conditions and to the task at hand.
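The report does not describe how the pipelines are combined. One simple way to express the hybrid idea, assuming each pipeline returns a labelled detection with a confidence score, is to try the pipelines in a configurable order and accept the first confident result; the order could then be changed per task or per pool condition. `Detection`, `hybrid_detect` and the 0.5 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Detection:
    label: str
    confidence: float  # 0..1
    source: str        # which pipeline produced it, e.g. "yolo" or "sift_pnp"


# A pipeline takes a camera frame and returns a detection, or None if nothing was found.
Detector = Callable[[object], Optional[Detection]]


def hybrid_detect(frame: object, pipelines: Dict[str, Detector],
                  order: List[str], min_confidence: float = 0.5) -> Optional[Detection]:
    """Try pipelines in the given order and return the first sufficiently confident detection."""
    for name in order:
        detection = pipelines[name](frame)
        if detection is not None and detection.confidence >= min_confidence:
            return detection
    return None  # no pipeline produced a confident result for this frame
```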

Navigation Suite

The navigation sensor suite consists of a VectorNav IMU, a Fizoptika fibre-optic gyroscope (FOG), a Doppler velocity log (DVL) and a barometric pressure depth sensor. A full-state Kalman filter fuses these measurements to achieve higher accuracy than any single sensor can provide on its own. The AUV navigation system is capable of accurate local and global navigation.
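The report does not give the filter's state vector or noise parameters. The sketch below shows only the predict/update structure of a Kalman filter on a single vertical axis: a constant-velocity model, the pressure sensor observing depth, and the DVL observing vertical velocity. `DepthKF` and all noise values are assumptions; the team's full-state filter is not reproduced here.

```python
from dataclasses import dataclass, field
from typing import List

Matrix = List[List[float]]


@dataclass
class DepthKF:
    """Illustrative 2-state Kalman filter: x = [depth (m), vertical velocity (m/s)]."""
    x: List[float] = field(default_factory=lambda: [0.0, 0.0])
    P: Matrix = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])
    q: float = 0.01  # assumed process noise (variance of unmodelled acceleration)

    def predict(self, dt: float) -> None:
        # Constant-velocity model: depth += velocity * dt.
        z, w = self.x
        self.x = [z + w * dt, w]
        (p00, p01), (p10, p11) = self.P
        # P = F P F^T + Q, with F = [[1, dt], [0, 1]] and white-acceleration Q.
        self.P = [
            [p00 + dt * (p10 + p01) + dt * dt * p11 + self.q * dt**4 / 4,
             p01 + dt * p11 + self.q * dt**3 / 2],
            [p10 + dt * p11 + self.q * dt**3 / 2,
             p11 + self.q * dt * dt],
        ]

    def update(self, measurement: float, H: List[float], r: float) -> None:
        """Scalar measurement update: measurement = H . x + noise with variance r."""
        h0, h1 = H
        P = self.P
        ph = [P[0][0] * h0 + P[0][1] * h1, P[1][0] * h0 + P[1][1] * h1]  # P H^T
        s = h0 * ph[0] + h1 * ph[1] + r                                    # innovation variance
        k = [ph[0] / s, ph[1] / s]                                         # Kalman gain
        innovation = measurement - (h0 * self.x[0] + h1 * self.x[1])
        self.x = [self.x[0] + k[0] * innovation, self.x[1] + k[1] * innovation]
        hp = [h0 * P[0][0] + h1 * P[1][0], h0 * P[0][1] + h1 * P[1][1]]  # H P
        self.P = [[P[i][j] - k[i] * hp[j] for j in range(2)] for i in range(2)]  # (I - K H) P

    def update_depth(self, depth: float, r: float = 0.01) -> None:
        self.update(depth, [1.0, 0.0], r)  # pressure sensor observes depth

    def update_velocity(self, w: float, r: float = 0.0025) -> None:
        self.update(w, [0.0, 1.0], r)      # DVL observes vertical velocity
```

Each sensor contributes through its own row `H`, which is how the fused estimate can be more accurate than any single measurement stream.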

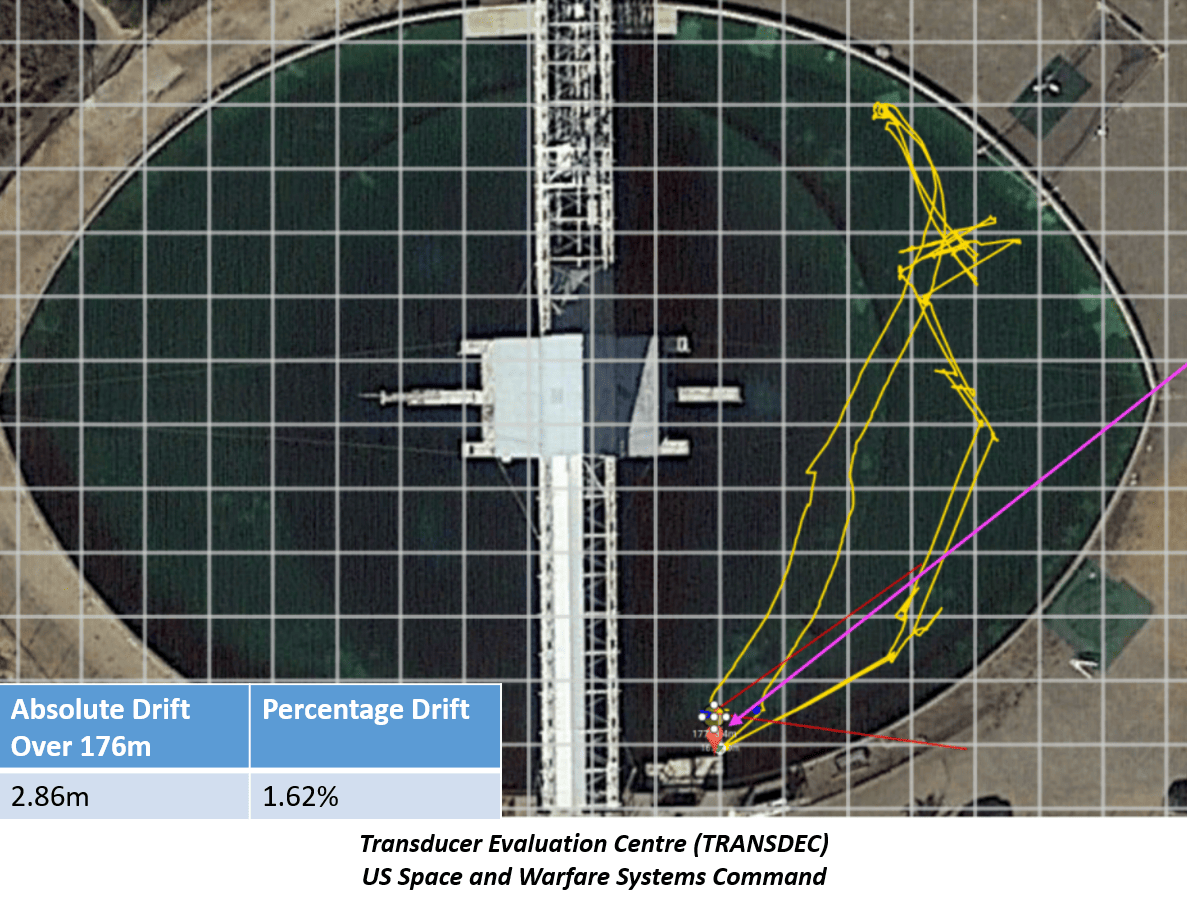

The current navigation system was evaluated over a 176 m run, with a measured drift of 1.62% using DVL/IMU sensor fusion.
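Assuming the drift figure is expressed as a percentage of distance travelled (the usual convention for DVL/IMU dead reckoning), it corresponds to an accumulated position error of roughly:

$$
e \approx 0.0162 \times 176\ \text{m} \approx 2.85\ \text{m}
$$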

Autonomous Manipulation

With a highly accurate navigation suite and robust object perception and tracking, the Bumblebee 4.1 AUV is capable of fully autonomous manipulation of objects. We have tested various types of manipulators, ranging from grabbing arms to marker droppers and mini projectiles, and the vehicle software supports each of these types of manipulation.